Jan 21, 2010:

This is the software renderer for Orkid that I just started. It is a side project to address my interest in software renderers, but I also have a practical need for it, and I will not be rushing it out. In the last project I worked on, I made a simple raytracer for baking lightmaps; when finished, this renderer will likely take its place. Previously, I had also tried using Pixie and Gelato (REYES renderers) for baking lightmaps, AO, etc. While they do work, they are buggy, and the way they bake to a map with non-overlapping UVs is horribly inefficient. They are designed to render to a camera view or to a ‘spatial database’, and in either case you have to turn off visibility culling and view-based dicing, so they end up doing far more work (time and memory) than you need. The ideal baker rasterizes triangles directly into UV space. It can still use raytracing or any other method to compute the color, but the decision of which pixels to actually compute falls to the UV-space rasterizer. I am sure these are issues that Turtle has had to deal with.
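A minimal sketch of the UV-space rasterization idea, assuming a triangle's UVs lie in [0, 1] and the lightmap is `width`×`height` texels. The names `rasterizeUV` and the callback signature are hypothetical, not Orkid's API. The rasterizer only decides *which* texels to compute (those whose centers fall inside the triangle's UV footprint) and hands each one, with its barycentric weights, to whatever shading method (raytracing, AO, etc.) does the real work.

```cpp
#include <algorithm>
#include <cmath>
#include <functional>

struct Vec2 { float x, y; };

// Signed edge function: positive on one side of a->b, negative on the other.
static float edgeFn(Vec2 a, Vec2 b, Vec2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Hypothetical UV-space rasterizer for baking. Visits each texel whose center
// lies inside (or on an edge of) the triangle's UV footprint and calls
// shade(x, y, w0, w1, w2) with that texel's barycentric weights.
// Returns the number of texels visited.
int rasterizeUV(Vec2 uv0, Vec2 uv1, Vec2 uv2, int width, int height,
                const std::function<void(int, int, float, float, float)>& shade) {
    // Scale UVs into texel space.
    const Vec2 a{uv0.x * width, uv0.y * height};
    const Vec2 b{uv1.x * width, uv1.y * height};
    const Vec2 c{uv2.x * width, uv2.y * height};
    const float area = edgeFn(a, b, c);
    if (area == 0.0f) return 0;  // degenerate in UV space: nothing to bake

    // Clamp the UV bounding box to the lightmap.
    const int x0 = std::max(0, static_cast<int>(std::floor(std::min({a.x, b.x, c.x}))));
    const int x1 = std::min(width - 1, static_cast<int>(std::ceil(std::max({a.x, b.x, c.x}))));
    const int y0 = std::max(0, static_cast<int>(std::floor(std::min({a.y, b.y, c.y}))));
    const int y1 = std::min(height - 1, static_cast<int>(std::ceil(std::max({a.y, b.y, c.y}))));

    int visited = 0;
    for (int y = y0; y <= y1; ++y) {
        for (int x = x0; x <= x1; ++x) {
            const Vec2 p{x + 0.5f, y + 0.5f};  // texel center
            // Dividing by the signed area keeps the weights positive inside
            // regardless of the triangle's winding in UV space.
            const float w0 = edgeFn(b, c, p) / area;
            const float w1 = edgeFn(c, a, p) / area;
            const float w2 = edgeFn(a, b, p) / area;
            if (w0 >= 0.0f && w1 >= 0.0f && w2 >= 0.0f) {
                shade(x, y, w0, w1, w2);
                ++visited;
            }
        }
    }
    return visited;
}
```

For the half-square triangle with UVs (0,0), (1,0), (0,1) on a 4×4 map, this visits 10 texels. Because the edge test is inclusive, texels exactly on an edge shared by two triangles are visited by both; a production baker would likely also want a fill rule and gutter dilation, which this sketch leaves out.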

This renderer is heavily threaded, using a thread pool with concurrent job queues and other atomic building blocks. The transformation pipeline is data parallel (fixed at 64 jobs), and each job handles 1/64th of the polys. Each transformation job distributes its polys to the appropriate tiles (buckets). Buckets can add polys atomically without locks, so each bucket can receive polys from multiple threads efficiently and concurrently. The tile rasterizers are also data parallel: the screen is divided into N×N pixel tiles (currently N=128), so the number of jobs is computed dynamically from the screen size. Task parallelism via the pipeline pattern is coming soon; it will require multi-buffered buckets.
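A minimal sketch of two ideas from this paragraph, under stated assumptions: a fixed-capacity bucket that many threads append to with a single atomic `fetch_add` (no mutex), and the 64-way split of the poly list into jobs. `Bucket`, `runJob`, and `tileJobCount` are hypothetical names. To keep it short, every poly goes into one bucket instead of being binned per tile, and overflow is only reported, not handled.

```cpp
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical fixed-capacity bucket: producers reserve a slot with one
// atomic increment, then write to that slot; no locks are taken.
class Bucket {
public:
    explicit Bucket(std::size_t capacity) : slots_(capacity), count_(0) {}

    bool push(std::uint32_t polyIndex) {
        const std::size_t slot = count_.fetch_add(1, std::memory_order_relaxed);
        if (slot >= slots_.size()) return false;  // overflow: caller must handle
        slots_[slot] = polyIndex;                 // each thread writes a distinct slot
        return true;
    }

    // Only meaningful after all producers have finished (e.g. after join()).
    std::size_t size() const {
        const std::size_t n = count_.load(std::memory_order_relaxed);
        return n < slots_.size() ? n : slots_.size();
    }

    std::uint32_t operator[](std::size_t i) const { return slots_[i]; }

private:
    std::vector<std::uint32_t> slots_;
    std::atomic<std::size_t> count_;
};

constexpr int kNumJobs = 64;

// Job `job` handles polys [job * n / 64, (job + 1) * n / 64).
void runJob(int job, std::size_t numPolys, Bucket& bucket) {
    const std::size_t begin = job * numPolys / kNumJobs;
    const std::size_t end = (job + 1) * numPolys / kNumJobs;
    for (std::size_t p = begin; p < end; ++p) bucket.push(static_cast<std::uint32_t>(p));
}

// Number of n x n tiles (and thus rasterizer jobs) covering a w x h screen.
int tileJobCount(int w, int h, int n) {
    return ((w + n - 1) / n) * ((h + n - 1) / n);
}
```

At 1920×1080 with 128×128 tiles, `tileJobCount` gives 15 × 9 = 135 rasterizer jobs. A relaxed atomic is enough here because each thread writes a distinct slot, and the bucket is read only after the producer threads are joined, which provides the needed synchronization.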

It started out using a Z-buffer, but I recently switched to a REYES-style A-buffer/compositor/hider for per-pixel, z-sorted lists of translucent fragments. I will probably make this configurable, since the Z-buffer is much faster and will still come in handy sometimes. A RenderMan-like shader language or environment is planned, with light, surface, atmospheric, and volume shaders. I’m not sold on displacement shaders yet (normal maps seem fine for the kinds of things I plan to use this for). I will also add a trace family of functions for ray tracing, AO, and SSS baking.
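A minimal sketch of what an A-buffer resolve does for a single pixel, assuming each fragment carries a depth and a straight (non-premultiplied) RGBA color: sort the fragment list by depth, then composite front to back with the "over" operator. `Fragment` and `resolvePixel` are hypothetical names, not the renderer's actual hider.

```cpp
#include <algorithm>
#include <vector>

// One translucent fragment in a pixel's list (straight alpha; smaller z = nearer).
struct Fragment { float z, r, g, b, a; };

// Premultiplied result of compositing a pixel's fragments.
struct RGBA { float r, g, b, a; };

RGBA resolvePixel(std::vector<Fragment> frags) {
    // The list is built in arbitrary order; sort it nearest first.
    std::sort(frags.begin(), frags.end(),
              [](const Fragment& lhs, const Fragment& rhs) { return lhs.z < rhs.z; });
    RGBA out{0.0f, 0.0f, 0.0f, 0.0f};
    for (const Fragment& f : frags) {
        const float t = 1.0f - out.a;  // light still passing through the nearer fragments
        out.r += t * f.a * f.r;
        out.g += t * f.a * f.g;
        out.b += t * f.a * f.b;
        out.a += t * f.a;
        if (out.a >= 0.999f) break;  // nearly opaque: farther fragments add almost nothing
    }
    return out;
}
```

With a 50% red fragment in front of a 50% blue one, the result is (0.5, 0, 0.25) with alpha 0.75, regardless of the order in which the fragments were inserted.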

All performance figures are for a dual Xeon E5520 (2.26 GHz) machine with all 8 cores (16 Hyper-Threading threads) enabled, running Windows 7 x64 with a 32-bit executable. It is not SIMD-optimized yet. I am also not using indexed triangles yet (and therefore not using a post-transform vertex cache either). While performance is important, I will be primarily aiming for image quality. Once I get the basic features ironed out and solid, I am going to move a few pipeline stages over to OpenCL/CUDA. I could probably save a lot of time by using CUDA/OpenCL directly as the shading language. The model is shown here with only back faces so you can see the internal complexity of the object (usually it would be hidden by the outer walls). The test model has approximately 350K triangles.
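For context on the indexed-triangle remark: with indexed triangles, a post-transform cache lets a vertex shared by nearby triangles be transformed once while it stays in the cache, instead of once per triangle corner. The sketch below models the cache as a simple FIFO of `cacheSize` vertex indices (an assumption; real caches differ) and counts how many vertex transforms an index list would cost. `countTransforms` is a hypothetical helper.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Counts vertex transforms for an indexed triangle list, assuming a FIFO
// post-transform cache holding `cacheSize` already-transformed vertices.
std::size_t countTransforms(const std::vector<std::uint32_t>& indices, std::size_t cacheSize) {
    std::deque<std::uint32_t> fifo;
    std::size_t misses = 0;
    for (std::uint32_t idx : indices) {
        if (std::find(fifo.begin(), fifo.end(), idx) != fifo.end()) continue;  // hit: reuse
        ++misses;                                   // miss: transform this vertex
        fifo.push_back(idx);
        if (fifo.size() > cacheSize) fifo.pop_front();  // evict the oldest entry
    }
    return misses;
}
```

For two triangles sharing an edge, `{0, 1, 2, 2, 1, 3}`, a 16-entry cache needs 4 transforms instead of the 6 a non-indexed list pays; a cache size of 0 degenerates to the non-indexed cost.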

Note: some video captures are colored incorrectly because Fraps has a swizzling/surface format bug with Direct2D; I believe R and B are swapped. I have since switched to ffmpeg, and those captures are fine.

(Version: Feb04)

Next, I am going to see whether porting the triangle scan converter and AA resolver to OpenCL helps performance.

Sharing buffers between stages should reduce host<->OpenCL data traffic.

Or maybe I should write a ray-marching volume shader. So many choices…

(Version: Feb01)

The OpenCL version is currently a bit slower on a GeForce GTX 260 Core 216 than on the 2x Xeon E5520. This is most likely due to:

- A very simple shader (barycentric->color with no conditionals), so the ratio of math to overhead is low. (Shader source code: here.)

- The need for locking: OpenCL cannot enqueue kernels from multiple threads, so only one tile buffer can access the OpenCL device at a time. The CPU version needs no locking at all, since it relies on atomic operations in its concurrent containers.

- PCI-Express data movement overhead.

- A bottleneck elsewhere in the pipeline.

- Shiny new, untested OpenCL drivers?

The performance gap should shift drastically in OpenCL’s favor once I add a more complicated shader.

Here is a more recent performance table, measured with the following setup:

- A-Buffer enabled.
- Backface culling off.
- All fragments 50% translucent for A-Buffer performance testing.
- 32×32 tile size (or 32×32×3×3 for 9xAA).
- 16 CPU threads.
- 2x Xeon E5520.
- Windows 7 x64.
- GeForce GTX 260 Core 216 (196.21 drivers).
- OpenCL (hacked NVIDIA SDK 2.3a with ATI Stream headers and static library).
- Local work-group size: 512 (setting it to 1 makes no difference).
- Still ~350K triangles without shared vertices.
- Average depth complexity: 2.

| AA Mode / Resolution | Frames/Sec | OpenCL | Notes |
|---|---|---|---|
| No AA 640×480 | 9.993 FPS | Disabled | *10.57 FPS (Shade: Enabled, Resolve: Disabled) |
| No AA 640×480 | 8.12 FPS | Enabled | *8.55 FPS (Shade: Enabled, Resolve: Disabled) |
| No AA 1920×1080 | 3.87 FPS | Disabled | |
| No AA 1920×1080 | 2.43 FPS | Enabled | |
| 9X AA 1920×1080 | 0.672 FPS | Disabled | *0.814 FPS (Shade: Enabled, Resolve: Disabled) |
| 9X AA 1920×1080 | 0.593 FPS | Enabled | *0.657 FPS (Shade: Enabled, Resolve: Disabled) |

(Version: Jan29)

720p Always

(Version: Jan28)

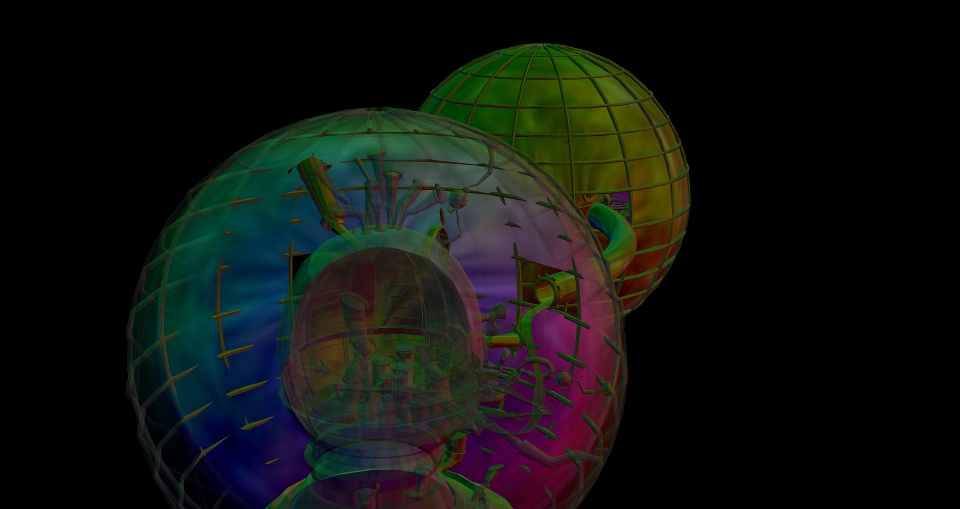

It is a composite of object-space face normals, a grid in object-space, and a single octave of object-space noise modulating another grid.
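A minimal sketch of a shader in that spirit, assuming an object-space position `p` and a unit-length face normal `n`: the normal is mapped to a base color, a coarse grid darkens it, and a finer grid is modulated by one octave of value noise. All names, frequencies, and weights are hypothetical, and value noise is a stand-in, since the post does not say which noise function was used.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };

// Hash an integer lattice point to a pseudo-random value in [0, 1).
static float hash3(int x, int y, int z) {
    std::uint32_t h = static_cast<std::uint32_t>(x) * 73856093u ^
                      static_cast<std::uint32_t>(y) * 19349663u ^
                      static_cast<std::uint32_t>(z) * 83492791u;
    h ^= h >> 13;
    h *= 0x5bd1e995u;
    h ^= h >> 15;
    return static_cast<float>(h & 0xFFFFFFu) / 16777216.0f;
}

static float lerp(float a, float b, float t) { return a + (b - a) * t; }
static float fade(float t) { return t * t * (3.0f - 2.0f * t); }  // smoothstep

// One octave of 3D value noise: smoothed trilinear blend of lattice hashes, in [0, 1].
float valueNoise(Vec3 p) {
    const int ix = static_cast<int>(std::floor(p.x));
    const int iy = static_cast<int>(std::floor(p.y));
    const int iz = static_cast<int>(std::floor(p.z));
    const float fx = fade(p.x - ix), fy = fade(p.y - iy), fz = fade(p.z - iz);
    auto h = [&](int dx, int dy, int dz) { return hash3(ix + dx, iy + dy, iz + dz); };
    const float x00 = lerp(h(0, 0, 0), h(1, 0, 0), fx);
    const float x10 = lerp(h(0, 1, 0), h(1, 1, 0), fx);
    const float x01 = lerp(h(0, 0, 1), h(1, 0, 1), fx);
    const float x11 = lerp(h(0, 1, 1), h(1, 1, 1), fx);
    return lerp(lerp(x00, x10, fy), lerp(x01, x11, fy), fz);
}

// 1 where p lies within `width` (in cell units) of a grid plane spaced 1/freq apart, else 0.
float gridLine(Vec3 p, float freq, float width) {
    auto onLine = [&](float c) {
        const float f = c * freq - std::floor(c * freq);  // position within the cell, [0, 1)
        return f < width || f > 1.0f - width;
    };
    return (onLine(p.x) || onLine(p.y) || onLine(p.z)) ? 1.0f : 0.0f;
}

// Composite: normal as base color, darkened by a coarse grid, plus a noise-modulated fine grid.
Vec3 shadeComposite(Vec3 p, Vec3 n) {
    const Vec3 base{0.5f * n.x + 0.5f, 0.5f * n.y + 0.5f, 0.5f * n.z + 0.5f};
    const float coarse = gridLine(p, 4.0f, 0.02f);
    const float fine = gridLine(p, 16.0f, 0.05f) *
                       valueNoise({p.x * 3.0f, p.y * 3.0f, p.z * 3.0f});
    auto mix = [&](float c) {
        return std::clamp(c * (1.0f - 0.5f * coarse) + 0.5f * fine, 0.0f, 1.0f);
    };
    return {mix(base.x), mix(base.y), mix(base.z)};
}
```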

Spent way too much time fiddling with ffmpeg and YouTube trying to get a decent-quality video. At least I now have a reasonable transcoding process ironed out.

Also recommended in 1280×720.

(Version: Jan26)

I recommend this one in 1280×720.

Here you can see the A-Buffer working.

The triangles are colorized with their barycentric coordinates, which helps visualize the mesh topology and also the depth complexity.
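A minimal sketch of that barycentric-to-color shading, assuming screen-space vertex positions and a sample point `p`: the three edge functions divided by the triangle's signed area give barycentric weights that sum to 1, and those weights are written straight out as RGB, so each vertex corner is a pure primary and individual triangles are easy to pick out. `barycentricColor` is a hypothetical name.

```cpp
struct Vec2 { float x, y; };
struct Color { float r, g, b; };

// Signed edge function for edge a->b evaluated at p.
static float edgeFn(Vec2 a, Vec2 b, Vec2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Writes the barycentric coordinates of p as a color.
// Returns false if p is outside the triangle or the triangle is degenerate.
bool barycentricColor(Vec2 v0, Vec2 v1, Vec2 v2, Vec2 p, Color& out) {
    const float area = edgeFn(v0, v1, v2);
    if (area == 0.0f) return false;
    // Dividing by the signed area makes the weights winding-independent.
    const float w0 = edgeFn(v1, v2, p) / area;
    const float w1 = edgeFn(v2, v0, p) / area;
    const float w2 = edgeFn(v0, v1, p) / area;
    if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f) return false;
    out = {w0, w1, w2};  // vertex 0 -> red, vertex 1 -> green, vertex 2 -> blue
    return true;
}
```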

(Version: Jan25)

Here is the current performance table with perspective-correct UV interpolation and differing AA modes.
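Perspective-correct interpolation means interpolating `u/w`, `v/w`, and `1/w` linearly in screen space and dividing per pixel, rather than interpolating `u` and `v` directly. A minimal sketch, assuming the pixel's screen-space barycentrics `b` and each vertex's clip-space `w` are already known (`perspectiveCorrectUV` is a hypothetical name):

```cpp
struct Vec2 { float x, y; };

// b:  screen-space barycentrics of the pixel (summing to 1).
// w:  clip-space w of each vertex (must be non-zero).
// uv: per-vertex texture coordinates.
Vec2 perspectiveCorrectUV(const float b[3], const float w[3], const Vec2 uv[3]) {
    float oneOverW = 0.0f, uOverW = 0.0f, vOverW = 0.0f;
    for (int i = 0; i < 3; ++i) {
        // Interpolate u/w, v/w and 1/w linearly in screen space.
        const float k = b[i] / w[i];
        oneOverW += k;
        uOverW += k * uv[i].x;
        vOverW += k * uv[i].y;
    }
    // Divide out 1/w to recover the true attribute values.
    return {uOverW / oneOverW, vOverW / oneOverW};
}
```

Halfway (in screen space) between a vertex at w = 1 with u = 0 and one at w = 3 with u = 1, this yields u = 0.25 rather than the affine 0.5, because the nearer vertex covers more of the screen. When all three w values are equal, it reduces to plain affine interpolation.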

Note: the scene does not fill the screen; the performance stats reflect the screen coverage you see in the videos. Overdraw and the AA resolve are included, however.

There is one tile buffer per thread in the thread pool (currently 32). Because each thread is permanently assigned its own buffer, no locking on tile buffers is needed, and rendering stays concurrent.

| AA Mode / Resolution | Frames/Sec | MPix/Sec | Tile Buffer RAM (RGBAZ, 32×128×128×AA) |
|---|---|---|---|
| No AA 1920×1080 | ~20 FPS | ~41 MPPS | ~4MB (32x128x128x1) |
| 4xAA 1920×1080 | ~15 FPS | ~124 MPPS | ~16MB (32x128x128x4) |
| 9xAA 1920×1080 | ~9 FPS | ~168 MPPS | ~36MB (32x128x128x9) |
| 16xAA 1920×1080 | ~7 FPS | ~232 MPPS | ~64MB (32x128x128x16) |
| 25xAA 1920×1080 | ~5 FPS | ~259 MPPS | ~100MB (32x128x128x25) |
| 49xAA 1920×1080 | ~2.8 FPS | ~284 MPPS | ~205MB (32x128x128x49) |
| 100xAA 1920×1080 | ~1.5 FPS | ~315 MPPS | ~400MB (32x128x128x100) |
| 256xAA 1920×1080 | ~0.868 FPS | ~461 MPPS | ~256MB (32x64x64x256) |
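The last two columns can be reproduced with simple arithmetic. A sketch, assuming 8 bytes per sample (32-bit RGBA plus 32-bit Z; inferred from the table, not stated in it) and that MPix/Sec counts every AA sample at the full 1920×1080 resolution: for example, 32 × 128 × 128 × 1 × 8 bytes = 4 MiB, and 20 FPS × 1920 × 1080 ≈ 41.5 MPix/Sec. The helper names are hypothetical.

```cpp
#include <cstdint>

// Assumption inferred from the table: 4 bytes RGBA + 4 bytes Z per sample.
constexpr std::uint64_t kBytesPerSample = 8;

// Total tile-buffer memory: one tileSize x tileSize buffer per thread, `samples` per pixel.
std::uint64_t tileBufferBytes(std::uint64_t threads, std::uint64_t tileSize, std::uint64_t samples) {
    return threads * tileSize * tileSize * samples * kBytesPerSample;
}

// Millions of samples per second at the given frame rate and resolution.
double megaSamplesPerSec(double fps, int width, int height, int samples) {
    return fps * width * height * samples / 1.0e6;
}
```

The RAM column mostly uses MiB; the 49xAA row's 205,520,896 bytes is about 205 MB but 196 MiB. Small throughput differences (e.g. ~315 vs. 311 MPix/Sec at 100xAA) come from the rounded FPS values.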

(Version: Jan24)

It currently runs at interactive rates (~25-28 Hz) at 1920×1080.

It is currently more geometry-bound than fill-bound; this will change, of course, when I start sampling textures and computing shaders.

(Version: Jan21)

You can see that polys spanning tile boundaries are currently dropped; this will be fixed soon.
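One common fix is to bin each triangle into every tile its screen-space bounding box touches, rather than into a single tile. A minimal sketch, assuming 2D screen-space vertices and one bucket per tile; `tilesOverlapped` and `binTriangle` are hypothetical names, and the buckets are plain vectors for brevity rather than the lock-free ones described above. The bounding box is conservative, so a bucket may receive a triangle that misses its tile; the per-pixel coverage test in the tile rasterizer rejects those.

```cpp
#include <algorithm>
#include <vector>

struct Vec2 { float x, y; };

// Inclusive range of tile coordinates; empty when x0 > x1 or y0 > y1.
struct TileRange { int x0, y0, x1, y1; };

TileRange tilesOverlapped(Vec2 a, Vec2 b, Vec2 c, int screenW, int screenH, int tileSize) {
    float minX = std::min({a.x, b.x, c.x}), maxX = std::max({a.x, b.x, c.x});
    float minY = std::min({a.y, b.y, c.y}), maxY = std::max({a.y, b.y, c.y});
    // Entirely off-screen: no tiles.
    if (maxX < 0.0f || maxY < 0.0f || minX >= screenW || minY >= screenH) return {0, 0, -1, -1};
    // Clamp to the screen before converting to integer tile coordinates.
    minX = std::max(minX, 0.0f);
    minY = std::max(minY, 0.0f);
    maxX = std::min(maxX, screenW - 1.0f);
    maxY = std::min(maxY, screenH - 1.0f);
    return {static_cast<int>(minX) / tileSize, static_cast<int>(minY) / tileSize,
            static_cast<int>(maxX) / tileSize, static_cast<int>(maxY) / tileSize};
}

// Append triangle index `tri` to the bucket of every tile its bounding box overlaps.
void binTriangle(int tri, Vec2 a, Vec2 b, Vec2 c, int screenW, int screenH, int tileSize,
                 std::vector<std::vector<int>>& buckets) {
    const int tilesX = (screenW + tileSize - 1) / tileSize;
    const TileRange r = tilesOverlapped(a, b, c, screenW, screenH, tileSize);
    for (int ty = r.y0; ty <= r.y1; ++ty)
        for (int tx = r.x0; tx <= r.x1; ++tx)
            buckets[ty * tilesX + tx].push_back(tri);
}
```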

This is just a little tool I made to debug my rasterizer’s pixel coverage and fill rules.
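For reference, a common way to make fill rules exact is the top-left rule: a pixel center lying exactly on an edge is counted only if that edge is a top or left edge, so pixels on an edge shared by two triangles are drawn exactly once. A minimal sketch, assuming y points down, pixel centers at half-integer coordinates, and triangles normalized so the interior is on the positive side of each edge function. `rasterizeTopLeft` is a hypothetical name; it scans the whole target for brevity and is not necessarily the rule this rasterizer uses.

```cpp
#include <utility>
#include <vector>

struct Vec2 { double x, y; };

// Positive when p is on the interior side of a->b for the winding used below.
static double edgeFn(Vec2 a, Vec2 b, Vec2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// With y down and positive-area winding: a top edge is horizontal with the
// interior below it (dx > 0); a left edge runs upward (dy < 0).
static bool isTopLeft(Vec2 a, Vec2 b) {
    const double dx = b.x - a.x, dy = b.y - a.y;
    return (dy == 0.0 && dx > 0.0) || dy < 0.0;
}

static bool inside(Vec2 a, Vec2 b, Vec2 p) {
    const double e = edgeFn(a, b, p);
    return e > 0.0 || (e == 0.0 && isTopLeft(a, b));
}

// Adds 1 to coverage[y * width + x] for each pixel center the triangle owns.
void rasterizeTopLeft(Vec2 v0, Vec2 v1, Vec2 v2, int width, int height,
                      std::vector<int>& coverage) {
    const double area = edgeFn(v0, v1, v2);
    if (area == 0.0) return;            // degenerate: owns no pixels
    if (area < 0.0) std::swap(v1, v2);  // normalize winding
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const Vec2 p{x + 0.5, y + 0.5};
            if (inside(v0, v1, p) && inside(v1, v2, p) && inside(v2, v0, p))
                ++coverage[y * width + x];
        }
    }
}
```

Rasterizing the two halves of a 4×4 square split along its diagonal (where four pixel centers lie exactly on the shared edge) leaves every pixel covered exactly once.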

(Newer Version)

Fixed the fill rules

(Older Version)

Apparently, I still have some debugging to do with it ;>